The multi-armed bandit algorithm outputs an action but doesn't use any information about the state of the environment (the context). He received his B.S.

Bandit algorithms are also finding their way into practical applications in industry, especially on online platforms where data is.

Multi-armed bandit problem. The non-stochastic version of cooperative multiplayer multi-armed bandits with collisions turns out to be a surprisingly challenging problem. Allen School of Computer Science & Engineering at the University of Washington and is the Guestrin Endowed Professor in Artificial Intelligence and Machine Learning. A PTAS for the Bayesian Thresholding Bandit Problem. Yue Qin, Jian Peng, Yuan Zhou. AISTATS 2020.

Combinatorial Multi-Armed Bandit with General Reward Functions. The 8 queens problem asks for a way to place 8 queens on an 8×8 chessboard so that no queen attacks any other. In this setting, all rewards drawn from an arm are independent and identically distributed.

Our adaptive-restart approach to estimate t is motivated by the algorithm of Chen et al. (2019) for contextual multi-armed bandits with non-stationary reward distributions where. Some recent professional activities. While research in the beginning was quite meandering, there is now a large community publishing hundreds of articles every year.

Combinatorial Multi-Armed Bandit and Its Extension to Probabilistically Triggered Arms. Hence we can formally.

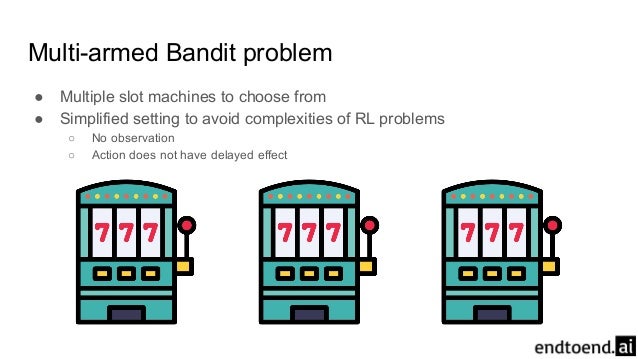

The standard k-armed bandit problem, or multi-armed bandit problem, is well studied in the research literature. It features papers from most areas of mathematics, with a special emphasis on directions that have a strong presence in Hungarian mathematics. In the original multi-armed bandit problem discussed in Part 1, there is only a single bandit, which can be thought of as a slot machine.

NSF CAREER CMMI-1846792; Google Faculty Research Award 2017. Below is a list of some of the most commonly used multi-armed bandit solutions. In probability theory and machine learning, the multi-armed bandit problem (sometimes called the K- or N-armed bandit problem) is a problem in which a fixed, limited set of resources must be allocated between competing alternative choices in a way that maximizes their expected gain, when each choice's properties are only partially known at the time of allocation and may become better understood as time passes or by allocating resources to the choice.

The multi-armed bandit problem with covariates. Kevin Jamieson is an Assistant Professor in the Paul G. Imagine you're at a casino, presented with a row of k slot machines, each with a hidden payoff function that determines how much it.

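This is the multi-armed bandit problem (also called the K-armed bandit problem, or MAB). How do you solve it? Praying to the Bodhisattva or bowing to the God of Gamblers won't help. The best approach is to try the machines, and not blindly: try them strategically, and the faster you learn, the better. These strategies are the bandit algorithms. We have an agent that we allow to choose actions, and each action returns a reward drawn from a given underlying probability distribution. The multi-armed bandit problem is often introduced via the analogy of a gambler playing slot machines.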
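The agent/action/reward loop above can be made concrete with a short simulation. This is a minimal sketch, assuming Bernoulli slot machines with hypothetical payout probabilities and the epsilon-greedy strategy (one common bandit algorithm, not one the text names); `epsilon_greedy` and its parameters are illustrative.

```python
import random

def epsilon_greedy(probs, steps=10_000, epsilon=0.1, seed=0):
    """Epsilon-greedy on Bernoulli arms with hidden payout probabilities `probs`.

    Returns the number of pulls per arm and the total reward collected.
    """
    rng = random.Random(seed)
    k = len(probs)
    counts = [0] * k       # how many times each arm was pulled
    values = [0.0] * k     # running mean reward of each arm
    total = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(k)                         # explore: pick a random arm
        else:
            arm = max(range(k), key=lambda a: values[a])   # exploit: best estimate so far
        reward = 1 if rng.random() < probs[arm] else 0     # the environment draws a reward
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean update
        total += reward
    return counts, total

if __name__ == "__main__":
    counts, total = epsilon_greedy([0.2, 0.5, 0.7])
    print(counts, total)
```

The fixed `epsilon` keeps exploring forever; that is simple to reason about but wastes pulls once the best arm is clear.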

Motivated by practical applications, chiefly clinical trials, we study the regret achievable for stochastic multi-armed bandits under the constraint that the employed policy must split trials into a small number of batches. [8] Wei Chen, Wei Hu, Fu Li, Jian Li, Yu Liu, and Pinyan Lu. Our results show that a very small number of batches already gives close to minimax-optimal regret bounds, and we also evaluate the number of trials in each batch.
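The batch constraint means the policy may look at outcomes and change its behavior only between batches. A minimal sketch, assuming Bernoulli arms and a simple batched successive-elimination rule chosen here for illustration (not the algorithm from the quoted paper); the batch sizes, `delta`, and the helper name are hypothetical.

```python
import math
import random

def batched_elimination(probs, batch_sizes, delta=0.05, seed=0):
    """Batched successive elimination on Bernoulli arms.

    Inside a batch the surviving arms are pulled round-robin without looking at
    rewards; only at the end of a batch are the statistics used to drop arms.
    """
    rng = random.Random(seed)
    k = len(probs)
    if not batch_sizes or batch_sizes[0] < k:
        raise ValueError("the first batch must pull every arm at least once")
    active = list(range(k))
    pulls = [0] * k
    sums = [0.0] * k
    # Hoeffding confidence radius with a union bound over arms and batches.
    log_term = math.log(2 * k * len(batch_sizes) / delta)

    def radius(arm):
        return math.sqrt(log_term / (2 * pulls[arm]))

    for size in batch_sizes:
        for t in range(size):  # non-adaptive within the batch
            arm = active[t % len(active)]
            sums[arm] += 1 if rng.random() < probs[arm] else 0
            pulls[arm] += 1
        # End of batch: drop arms whose upper bound is below the best lower bound.
        means = {a: sums[a] / pulls[a] for a in active}
        best_lower = max(means[a] - radius(a) for a in active)
        active = [a for a in active if means[a] + radius(a) >= best_lower]
    return active, pulls

if __name__ == "__main__":
    print(batched_elimination([0.3, 0.5, 0.8], [300, 1200, 4800]))
```

Geometrically growing batches let the policy discard clearly bad arms early while still using only a few decision points.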

For basic information about the queen in chess, you should know that a queen can move in any direction (vertically, horizontally, or diagonally) and any number of squares. The multi-armed bandit problem. Multi-armed bandits have now been studied for nearly a century.
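The queen's movement rules turn the 8 queens problem into a constraint check: no two queens may share a column or a diagonal, and placing one queen per row handles rows. A minimal backtracking sketch; `solve_n_queens` is a hypothetical helper name.

```python
def solve_n_queens(n=8):
    """Return all placements as tuples: entry r is the column of the queen in row r."""
    solutions = []
    cols, diags, anti_diags = set(), set(), set()  # occupied columns and diagonals
    placement = []

    def place(row):
        if row == n:
            solutions.append(tuple(placement))
            return
        for col in range(n):
            # A queen attacks along its column and both diagonals.
            if col in cols or (row - col) in diags or (row + col) in anti_diags:
                continue
            cols.add(col)
            diags.add(row - col)
            anti_diags.add(row + col)
            placement.append(col)
            place(row + 1)
            placement.pop()  # undo and try the next column
            cols.remove(col)
            diags.remove(row - col)
            anti_diags.remove(row + col)

    place(0)
    return solutions

if __name__ == "__main__":
    print(len(solve_n_queens(8)))  # 92
```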

Imagine you are in a casino facing multiple slot machines, each configured with an unknown probability of paying out a reward on a single play. The multi-armed bandit problem is a classic problem that demonstrates the exploration-vs-exploitation dilemma well. Considering all these conflicting parameters, this problem is investigated as a budget-constrained multi-player multi-armed bandit (MAB) problem.
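One standard way to handle the exploration-vs-exploitation dilemma is an optimism-based index such as UCB1, which the text does not name. A minimal sketch, assuming Bernoulli slot machines with hypothetical payout probabilities; `ucb1` is an illustrative helper.

```python
import math
import random

def ucb1(probs, steps=10_000, seed=0):
    """Run UCB1 on Bernoulli arms; return how often each arm was pulled."""
    rng = random.Random(seed)
    k = len(probs)
    counts = [0] * k
    means = [0.0] * k
    for t in range(1, steps + 1):
        if t <= k:
            arm = t - 1  # play every arm once so each index is defined
        else:
            # Optimism: empirical mean plus a bonus that shrinks as the arm is pulled more.
            arm = max(range(k),
                      key=lambda a: means[a] + math.sqrt(2 * math.log(t) / counts[a]))
        reward = 1 if rng.random() < probs[arm] else 0
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]
    return counts

if __name__ == "__main__":
    print(ucb1([0.2, 0.5, 0.7]))
```

Unlike a fixed exploration rate, the bonus term makes an arm worth revisiting only while its estimate is still uncertain.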

Thresholding Bandit with Optimal Aggregate Regret. Chao Tao, Saúl Blanco, Jian Peng, Yuan Zhou. Photo by Carl Raw on Unsplash. Vianney Perchet and Philippe Rigollet (2013), Journal Ann.

Multi-armed bandit problems are some of the simplest reinforcement learning (RL) problems to solve. In 2009 from the University of Washington under the advisement of Maya Gupta, his M.S. In this first paper, we obtain an optimal algorithm for the model with announced collisions.

Adaptive Double Exploration Tradeoff for Outlier Detection. Xiaojin Zhang, Honglei Zhuang, Shengyu Zhang, Yuan Zhou. AAAI 2020. [9] Qinshi Wang and Wei Chen. The K-armed stochastic bandit model is a decision-making problem in which a learner sequentially picks an action among K alternatives, called arms, and collects a random reward.
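Performance in the K-armed stochastic model is usually measured by regret, the quantity the batched-bandit passage above refers to. A standard definition, stated here as an assumption since the text does not give one, with $\mu_i$ the mean reward of arm $i$, $r_t$ the reward collected at round $t$, and $T$ the number of rounds:

$$
R_T = T\,\mu^{*} - \mathbb{E}\left[\sum_{t=1}^{T} r_t\right], \qquad \mu^{*} = \max_{1 \le i \le K} \mu_i .
$$

For example, with two arms of means 0.5 and 0.7, a policy that pulls each arm 500 times over $T = 1000$ rounds collects an expected reward of $500 \cdot 0.5 + 500 \cdot 0.7 = 600$, so its regret is $1000 \cdot 0.7 - 600 = 100$.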

In 2010 from Columbia University under the. This is an algorithm for continuously balancing exploration with exploitation. Amazon Research Award 2017.

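Contextual Combinatorial Cascading Bandits. There are many different solutions that computer scientists have developed to tackle the multi-armed bandit problem. The Multi-armed Bandit Problem: suppose there is a row of slot machines in front of you whose expected payouts differ. Naturally, you want to end up playing the one with the higher win rate. The multi-armed bandit question is which arm to pull to get the largest return. The following analysis is based on [1].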

MAB is named after a thought experiment in which a gambler has to choose among multiple slot machines with different payouts, and the gambler's task is to maximize the amount of money he takes home. Analysis of Thompson Sampling for the multi-armed bandit problem. Periodica Mathematica Hungarica, founded in 1971, is the journal of the Hungarian Mathematical Society (Bolyai Society).
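Thompson sampling, named in the paper title above, keeps a posterior over each machine's payout rate, draws one sample per arm, and plays the arm with the largest draw. A minimal sketch, assuming Bernoulli rewards and uniform Beta(1, 1) priors; `thompson_sampling` is a hypothetical helper, not code from that paper.

```python
import random

def thompson_sampling(probs, steps=10_000, seed=0):
    """Beta-Bernoulli Thompson sampling; returns how often each arm was pulled."""
    rng = random.Random(seed)
    k = len(probs)
    alpha = [1] * k  # 1 + observed successes (Beta posterior parameter)
    beta = [1] * k   # 1 + observed failures
    for _ in range(steps):
        # Draw a plausible payout rate for every arm from its posterior.
        samples = [rng.betavariate(alpha[a], beta[a]) for a in range(k)]
        arm = max(range(k), key=lambda a: samples[a])
        if rng.random() < probs[arm]:
            alpha[arm] += 1
        else:
            beta[arm] += 1
    return [alpha[a] + beta[a] - 2 for a in range(k)]

if __name__ == "__main__":
    print(thompson_sampling([0.2, 0.5, 0.7]))
```

Exploration here comes from posterior uncertainty: an arm with few observations has a wide Beta distribution and occasionally produces the largest sample.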

For example, if you use a multi-armed bandit to choose whether to display cat images or dog images to a user of your website, you'll make the same random decision even if you know something about the user's preferences. To a bandit problem with linear feedback and a locally quadratic loss function. The world chooses k rewards $r_1, \dots, r_k \in [0, 1]$.
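The cat-vs-dog example can be sketched by keeping separate statistics per user context, so the chosen image can differ between users. A minimal sketch, assuming a hypothetical two-segment setup (`cat_lover`, `dog_lover`) with made-up click rates and per-context epsilon-greedy; `simulate_clicks` is an illustrative helper.

```python
import random
from collections import defaultdict

ARMS = ["cat", "dog"]

# Hypothetical click-through rates: each user segment prefers a different image.
CLICK_RATE = {
    ("cat_lover", "cat"): 0.30, ("cat_lover", "dog"): 0.10,
    ("dog_lover", "cat"): 0.10, ("dog_lover", "dog"): 0.30,
}

def simulate_clicks(steps=20_000, epsilon=0.1, use_context=True, seed=0):
    """Return the average click rate of epsilon-greedy with or without context."""
    rng = random.Random(seed)
    counts = defaultdict(int)   # (context, arm) -> pulls
    means = defaultdict(float)  # (context, arm) -> mean reward
    clicks = 0
    for _ in range(steps):
        user = rng.choice(["cat_lover", "dog_lover"])
        ctx = user if use_context else "everyone"  # a context-free bandit ignores the user
        if rng.random() < epsilon:
            arm = rng.choice(ARMS)
        else:
            arm = max(ARMS, key=lambda a: means[(ctx, a)])
        reward = 1 if rng.random() < CLICK_RATE[(user, arm)] else 0
        counts[(ctx, arm)] += 1
        means[(ctx, arm)] += (reward - means[(ctx, arm)]) / counts[(ctx, arm)]
        clicks += reward
    return clicks / steps

if __name__ == "__main__":
    print(simulate_clicks(use_context=False), simulate_clicks(use_context=True))
```

Without context, both images look equally good on average, so the bandit cannot exceed roughly the mixed rate; with context, each segment converges to its preferred image.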

In this paper, a joint transmission-power selection, data-rate maximization, and interference-mitigation problem is addressed. Problem on synthetic agriculture data. It is regarded as a repeated game between two players, with every stage consisting of the following.
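For the repeated game in which the world picks rewards in $[0, 1]$ for the k arms at each stage and the learner sees only the reward of the arm it plays, a standard algorithm (not named in the text) is EXP3: sample from exponential weights mixed with uniform exploration, and update with importance-weighted reward estimates. A minimal sketch; `gamma`, `reward_fn`, and the usage rewards are hypothetical.

```python
import math
import random

def exp3(reward_fn, k, steps, gamma=0.1, seed=0):
    """EXP3 for adversarial bandits with rewards in [0, 1]; returns the total reward.

    reward_fn(t, arm) is the reward the world assigned to `arm` at stage t.
    """
    rng = random.Random(seed)
    weights = [1.0] * k
    total = 0.0
    for t in range(steps):
        w_sum = sum(weights)
        # Mix the exponential-weights distribution with uniform exploration.
        probs = [(1 - gamma) * w / w_sum + gamma / k for w in weights]
        arm = rng.choices(range(k), weights=probs)[0]
        reward = reward_fn(t, arm)
        total += reward
        # Importance weighting makes the reward estimate unbiased for every arm.
        estimate = reward / probs[arm]
        weights[arm] *= math.exp(gamma * estimate / k)
        top = max(weights)
        weights = [w / top for w in weights]  # rescale to avoid overflow
    return total

if __name__ == "__main__":
    # Hypothetical world: arm 1 always pays 1, arm 0 always pays 0.
    print(exp3(lambda t, arm: 1.0 if arm == 1 else 0.0, k=2, steps=5000))
```

Rescaling the weights leaves the sampling probabilities unchanged, since they depend only on weight ratios.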

The model in which collisions are not announced remains wide open from both the upper- and lower-bound perspectives. [7] Shuai Li, Baoxiang Wang, Shengyu Zhang, and Wei Chen. We consider a multi-armed bandit problem in a setting where each arm produces a noisy reward realization that depends on an observable random covariate.
